Using AI To Solve A Coding Challenge

Trying out Augment Code on the Spelling Correction Tool Coding Challenge.

Hi, this is John with this week’s Coding Challenge.

🙏 Thank you for being one of the 91,420 software developers who have subscribed. I’m honoured to have you as a reader. 🎉

If there is a Coding Challenge you’d like to see, please let me know by replying to this email📧

Introduction

I get a lot of questions about AI replacing software engineers. They broadly fall into three categories:

Will AI replace software engineers entirely? Answer: Highly unlikely.

Will AI make software engineers more productive? Answer: It depends.

How should I use AI as a software engineer? Answer: It depends.

The “it depends” answers are where it gets interesting. I believe AI has the potential to make software engineers more productive if used well. So when Augment Code offered to sponsor an issue of Coding Challenges I thought it would be great to use their AI software development platform to tackle one of the coding challenges, testing out their solution and describing how I use AI to build software.

Augment Code claims to be the only coding agent with a Context Engine that understands how you build software, and I was keen to see if it could work the way I build software: in small steps, using Test-Driven Development.

The Goal - Spelling Correction In Gleam

To really leverage the power of Augment Code’s AI I thought it would be interesting to use a new programming language that I’m learning (Gleam) and tackle the Spelling Correction Tool coding challenge.

Checking Out Augment Code

Signing up was quick and easy, although it was a shame that I had to enter my credit card details to access the free trial. I signed in, installed the Visual Studio Code extension and then signed that in too, which got me to this:

I chose to give the VS Code extension a go, but they also offer support for JetBrains, Vim, and Neovim. There’s also a chatbot and a CLI tool. I do really like that it allows me to use the IDE I want, unlike some of the competitors - that’s a big win in my mind!

Once the VS Code extension was ready, it was time to create a new repository for my spelling correction tool project and start building.

Building With Augment Code

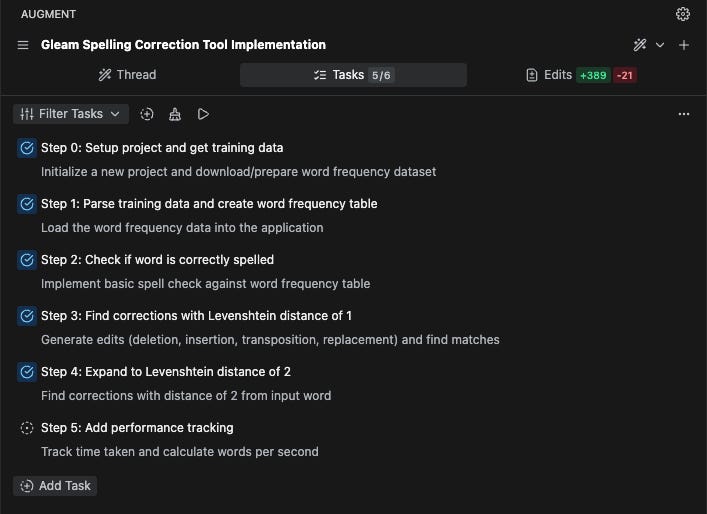

To get started leveraging the Context Engine, I shared the URL of the spelling correction tool Coding Challenge with Augment Code. Rather like an overeager kid, the agent then immediately set off to try and do it all itself. I’d have preferred it to take the project description as context for future reference rather than treating it as a prompt to go ahead and build everything in one shot. Not a problem though, as it was easy enough to hit the stop button and start giving it clear instructions on how to work my way.

However, overeager as it was, it was great to see that it picked out the steps of the project and created a task list from them:

From there it was relatively simple to explain how I wanted to work: one step at a time using test-driven development.

There were a few challenges along the way. It struggled to get the training data and kept trying to search the Internet for other resources instead of simply generating a curl command to download it from the supplied URL.

It worked through steps 1 to 5 of the coding challenge effectively, settling into a rhythm of:

AI repeats the brief for the step.

AI writes some tests for the required changes.

I review the tests and highlight any missing cases or errors in the tests.

Iterate on tests until I am satisfied.

Generate code to pass the tests.

Verify code complies with the requirements of the step.
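As a rough illustration of that rhythm, here is a minimal test-first sketch. It is written in Python rather than Gleam purely for illustration, it is not the code from the project, and the `word_counts` helper and its tests are hypothetical stand-ins for a step such as counting word frequencies in the training data.

```python
import re
from collections import Counter


# First: the tests pin down the behaviour the step requires.
# These are the part that gets reviewed and iterated on.
def test_counts_each_word():
    assert word_counts("the cat the dog") == {"the": 2, "cat": 1, "dog": 1}


def test_ignores_case_and_punctuation():
    assert word_counts("The cat. THE dog!") == {"the": 2, "cat": 1, "dog": 1}


def test_empty_text_has_no_words():
    assert word_counts("") == {}


# Second: just enough code to make those tests pass.
def word_counts(text):
    """Count lowercase alphabetic words in text (hypothetical helper)."""
    return dict(Counter(re.findall(r"[a-z]+", text.lower())))


# Third: run the tests. An AssertionError means the step is not done yet.
for test in (
    test_counts_each_word,
    test_ignores_case_and_punctuation,
    test_empty_text_has_no_words,
):
    test()
```

The value of the review stage is in catching what the tests leave out, for example whether words with apostrophes should count as one word or two, before any implementation is generated.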

I really liked that after completing a couple of steps of the coding challenge the agent learned the process and began to offer the next step without prompting.

After a few hours of working together, part of which was me learning to use Augment Code’s interface, I had a working solution to the coding challenge, written in Gleam. It is only the second program I’ve ever created in Gleam.

I’m confident that I could build on and maintain the codebase in future too. That’s a big positive of my experience with Augment Code, and perhaps a reflection of their focus on creating a Context Engine. Their engineer summed up the Context Engine:

“Often when the agent is trying to do something, something similar has been done before. We want to learn from that thing that was done before and adapt it to a new situation.”

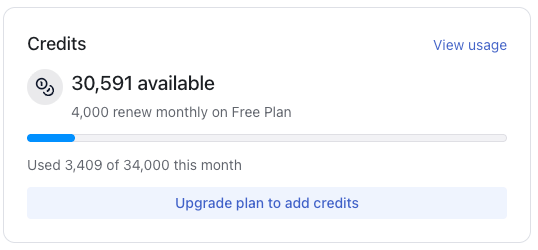

To get this far I used around 10% of the credits from the trial, far less than I expected given the amount of work it did. I suspect that if I had used it daily in a full-time software development role, the $60/month plan would cover my usage.

I really liked that it was clear how much I’d used and that the pricing page clearly details how many credits each tier gets. On top of that, the FAQ explains roughly what that level of credits will enable:

“A small task that uses 10 tool calls would cost around 300 credits.

A complex task that requires 60 tool calls would cost around 4,300 credits”

When I compared other similar tools, they all seemed determined to make it difficult to work out what the actual cost of using them would be. Augment Code wins on clarity here.
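Taking the two FAQ figures quoted above at face value, a quick back-of-the-envelope calculation shows that credits do not scale linearly with tool calls. The snippet below is only a sketch of that arithmetic in Python; the variable and function names are made up for illustration, and the real cost of a task will vary.

```python
# Figures quoted from the FAQ: (tool calls, approximate credits).
small_task = (10, 300)
complex_task = (60, 4300)


def credits_per_call(task):
    """Average credits spent per tool call for a (calls, credits) pair."""
    calls, credits = task
    return credits / calls


print(round(credits_per_call(small_task), 1))    # 30.0
print(round(credits_per_call(complex_task), 1))  # 71.7
```

On those numbers a complex task costs more than twice as much per tool call as a small one, so it is safer to budget by task size than by a flat per-call rate.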

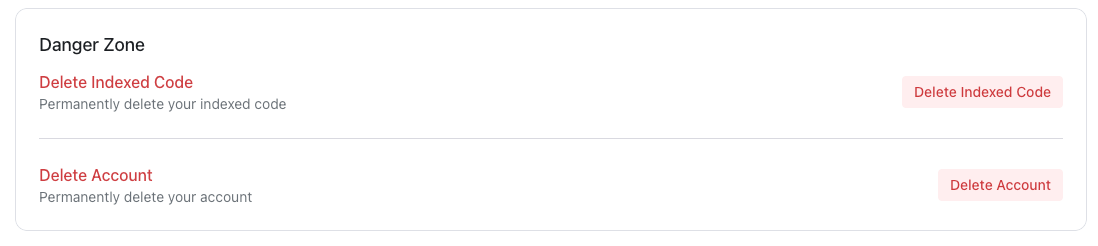

There’s a nice clean accounts page that details your usage and billing cycle and I was pleased to see that there is an option to delete my indexed code and account clearly available on the subscription page.

So, where does that leave us on the questions from the introduction?

Will AI replace software engineers entirely? No, it is not going to replace software engineers. Yes, it can write code, but software engineering is so much more than that.

Will AI make software engineers more productive? Yes. As software engineers learn to leverage AI tools like Augment Code, and as the tools improve, there is huge scope for us to become more productive.

How should I use AI as a software engineer? You should use AI as a tool that augments your capability and the capability of the team.

These AI tools can write code, and they can write good, maintainable and testable code, but they still need a competent software engineer to spot where the code is drifting away from that or where the tests are wrong or overly optimistic.

They’re a great tool when used by experienced software engineers. So if you haven’t already done so, run some experiments using AI to help you and your team build software. If you’re not using AI yet, or you’re not getting the results you want from it, I recommend checking out Augment Code’s free trial. Use it to build either a new tool to help your team or a new feature in your existing codebase.

If I were picking a tool for my team I would favour Augment Code for two reasons: it allows team members to continue using their favourite IDE, whether that’s Neovim, VS Code or a JetBrains IDE, and I would have a clearer understanding of what I was buying and what it was going to cost me.

I am enjoying using Augment Code and I will be continuing to use it to build solutions to several more of the Coding Challenges in Gleam.

Thanks and happy coding!

John

P.S. If You Enjoy Coding Challenges Here Are Four Ways You Can Help Support It

Refer a friend or colleague to the newsletter. 🙏

Sign up for a paid subscription - think of it as buying me a coffee ☕️ twice a month, with the bonus that you also get 20% off any of my courses.

Buy one of my courses that walk you through a Coding Challenge.

Subscribe to the Coding Challenges YouTube channel!

Finally, thank you to Augment Code for sponsoring this issue of Coding Challenges and helping me to keep producing more projects and content for software engineers.
